Dahl.calvin.edu - HPC at Calvin

Calvin's efforts in high performance computing began in summer 1997, when Prof. Joel Adams attended an NSF parallel computing workshop led by Chris Nevison and Nan Schaller at Colgate University.

There, he learned how to design parallel algorithms, how to implement them using the Message Passing Interface (MPI), and how to install and use MPICH on a network of workstations (NOW).

In January 1998, Adams taught Calvin's first parallel computing course, using MPI to run parallel programs on the NOW in Calvin's Unix Lab.

This let students gain experience with MPI, but they could not get good timing results, with so many different programs competing for the same CPUs.

Students in this course learned about parallel algorithms, software, and hardware platforms, including a low-cost NASA-pioneered parallel architecture called the Beowulf cluster. Adams began to contemplate how to build a Beowulf cluster at Calvin.


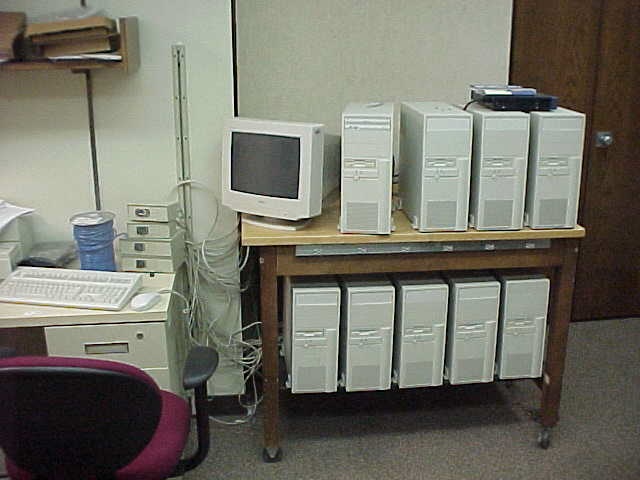

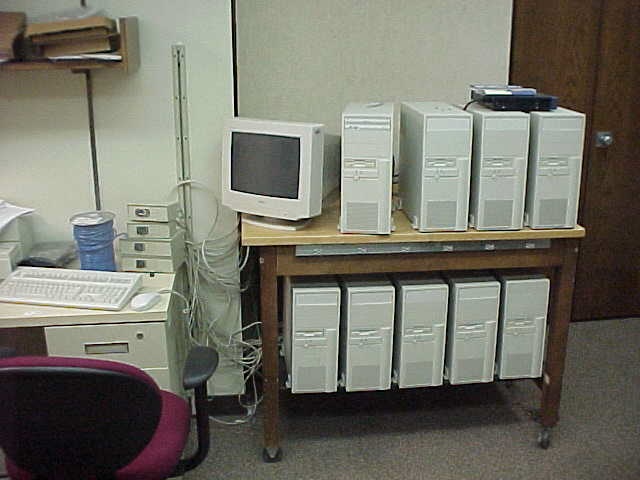

A junior in the course named Mark Ryken became very interested in Beowulf clusters, and decided to build one for his senior project. Mark worked for Calvin's IT department, and convinced them to donate a dozen old 486 PCs to the project, from which he was able to rebuild eight working PCs. Inspired by Loki, he used Fast (100 Mbps) Ethernet to connect these PCs in a star plus a 3D hypercube topology. Each PC received 4 Fast Ethernet cards: one linked to the switch and three linked to "neighbors" in the hypercube. In 1999, a 100 Mbps Ethernet switch and 32 network interface cards cost nearly $5,000, an expense that was covered by a Calvin College Science Division grant; Mark made his own patch cables to save money.

The resulting cluster, shown at the right, came to be known as MBH'99, which stood for either Mark's Beowulf Hypercube or Mark's Big Headache, depending on how things were going on a given day.

In 1999, all known Beowulf clusters were built by research scientists or graduate students, so MBH'99 was, to our knowledge, the first undergraduate Beowulf cluster.
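The star-plus-hypercube wiring described above can be sketched in a few lines. This is only an illustration, assuming the eight PCs are numbered 0 through 7 so that hypercube neighbors differ in exactly one bit of their binary ID; the numbering scheme and the function names are hypothetical and do not come from Mark's project.

```python
def hypercube_neighbors(node, dimensions):
    """Return the IDs of the nodes directly linked to `node` in a hypercube.

    Assumes nodes are numbered 0 .. 2**dimensions - 1 (an illustrative choice).
    """
    # Flipping one bit of the ID per dimension yields one neighbor per dimension.
    return [node ^ (1 << bit) for bit in range(dimensions)]


def count_network_cards(dimensions, switch_links=1):
    """Total network cards for a star-plus-hypercube cluster of 2**dimensions nodes."""
    nodes = 2 ** dimensions
    # Each node needs one card per hypercube neighbor plus its link(s) to the switch.
    return nodes * (dimensions + switch_links)


if __name__ == "__main__":
    for node in range(8):
        links = [format(n, "03b") for n in hypercube_neighbors(node, 3)]
        print(f"node {node:03b} links to {links}")
    print("network cards needed:", count_network_cards(3))
```

For a 3D hypercube this gives three neighbors per node and 8 x (3 + 1) = 32 cards in total, which matches the 32 network interface cards mentioned above.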


MBH'99 worked quite well as a proof-of-concept prototype system, but being based on 33 MHz 486 CPUs, it was too slow to provide anything in the way of actual high performance.


In 2000, Adams wrote an NSF-MRI proposal for a grant to build a dedicated Beowulf cluster at Calvin. This proposal was not funded, but the program officer encouraged him to revise and resubmit. He did so in 2001, and the proposal was funded with a grant of about $163,000.

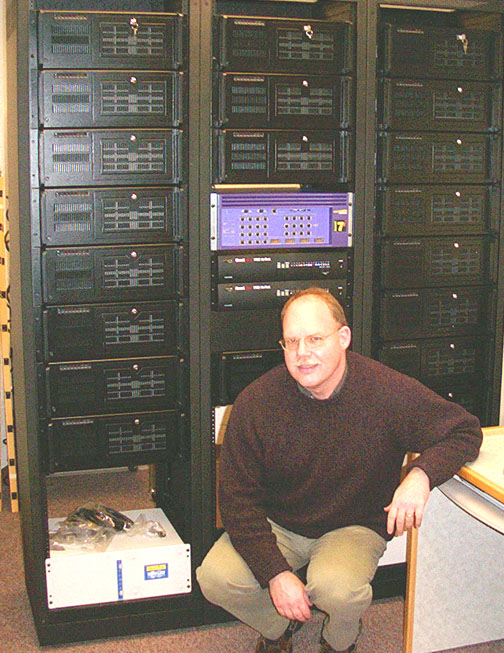

Adams named this new cluster Ohm, in honor of Georg Ohm, the famous German scientist. It was also a silly acronym for "Our Hypercube Multiprocessor". Ohm was built by Adams and his students from raw CPUs, motherboards, RAM sticks, etc.

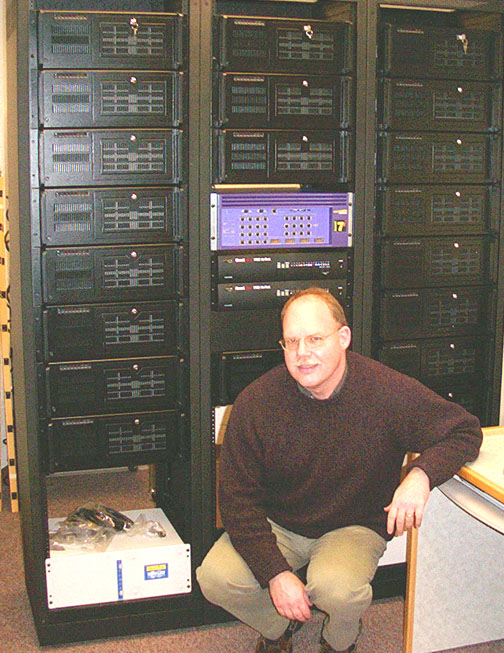

As can be seen in the picture to the left, Ohm consisted of three racks containing 16 compute nodes, 2 "master" nodes, a file server providing access to a 1.5 TB RAID array, and 3 dedicated UPS units. This arrangement permitted two students to simultaneously use 9 nodes each without their computations interfering with one another; or a single researcher could use all 18 nodes.

These nodes were connected using Gigabit Ethernet, in a star plus 4D hypercube topology. Gigabit Ethernet was relatively expensive at the time -- each network interface card cost roughly $450, and the switch cost over $18,000.

Each of Ohm's nodes had a 1 GHz AMD Athlon CPU and 1 GB of RAM. Its theoretical top speed (Rpeak) was 18 Gflops; its measured top speed (Rmax, via HP Linpack) was 12 Gflops.
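As a rough check on these figures, the sketch below computes Rpeak and the fraction of it that Linpack actually achieved. The assumption of one floating-point operation per clock cycle is ours; it is simply the value that makes 18 nodes at 1 GHz come out to the quoted 18 Gflops. The function names are hypothetical.

```python
def rpeak_gflops(nodes, clock_ghz, flops_per_cycle=1.0):
    """Theoretical peak in Gflops: nodes x clock rate (GHz) x flops per cycle.

    flops_per_cycle=1.0 is an assumption chosen to match the quoted Rpeak.
    """
    return nodes * clock_ghz * flops_per_cycle


def efficiency(rmax_gflops, rpeak):
    """Fraction of the theoretical peak achieved on the benchmark."""
    return rmax_gflops / rpeak


if __name__ == "__main__":
    peak = rpeak_gflops(nodes=18, clock_ghz=1.0)
    print(f"Rpeak = {peak:.1f} Gflops")
    print(f"Rmax/Rpeak = {efficiency(12.0, peak):.1%}")
```

Under that assumption, Ohm's measured 12 Gflops is about two-thirds of its theoretical peak.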

Ohm was a multitopology cluster, meaning its topology could be changed on the fly between a star, a star+ring, or a star+hypercube. This flexibility permitted Adams and his students to conduct a price/performance analysis comparing these three Beowulf topologies. Adams and his students discovered that for a balanced or CPU-bound Beowulf cluster, the star topology was most cost-efficient.

Many people contributed to the Ohm project, but a few stand out: Jeff Greenfield, Dave Vos, and Ryan VanderBijl helped assemble Ohm; Dave Vos was Ohm's first student administrator, who did much of the system configuration; Kevin DeGraaf was Ohm's second student administrator, who wrote the scripts to change Ohm's topology "on the fly"; Elliot Eshelman was Ohm's third student administrator, who wrote scripts to automate the price/performance analysis experiment; the late Dan Russcher was the fourth student administrator; and Tim Brom was the fifth. Thanks to each of these people for their work on this project.

Each of Ohm's nodes originally had a local IBM Deskstar (aka "Deathstar") disk. These disks had a failure rate exceeding 70%. After months of replacing disks, we jettisoned them and reconfigured Ohm's nodes to boot across the network.


Aside from these disk problems, Ohm provided highly reliable service at Calvin from 2001 until it was decommissioned in 2007.

During this time, Ohm provided a high performance computational modeling platform for Calvin's Biophysics, Chemistry, Computer Science, and Physics researchers. It also provided extensive research training for students in the Computer Science and Engineering departments, especially the students in Adams' course, which by this time had evolved into CS 374: High Performance Computing.

From January until June 2005, Adams taught in Iceland at what is now the School of Engineering of Reykjavik University.

While there, he and his students built the six-node cluster shown to the right. Each node had an Intel Pentium-4 CPU and 2 GB of RAM. The six nodes were connected using Gigabit Ethernet.

Using HP Linpack, this cluster achieved 20.25 Gflops (Rmax), which was amazing performance for so modest a cluster. Adams' students named this cluster Sleipnir, after Odin's many-legged horse.


In summer 2005, Adams attended a cluster computing workshop at the National Computational Science Institute at the University of Oklahoma, in search of computational science examples to add interest to his CS 374 course.

This workshop was led by Henry Neeman, Paul Gray, David Joiner, Tom Murphy, and Charlie Peck.

Adams gave a talk there on his Ohm research project, and saw Little Fe, a compact six-node Beowulf cluster.

In fall 2005, Adams gave a talk at the Oberlin Conference on Computation and Modeling (OCCAM). Little Fe was also being demonstrated at the conference. Student Tim Brom accompanied Adams, and the two began to discuss the pros and cons of compact clusters like Little Fe. The pros included small size and portability; the main con was limited performance -- that version of Little Fe used 1 GHz CPUs and 100 Mbps Ethernet, making it a network-bound cluster.


In summer 2006, two important developments occurred:

- Motherboards began to appear with on-board Gigabit Ethernet.

- Dual-core CPUs replaced single-core CPUs as the new standard.

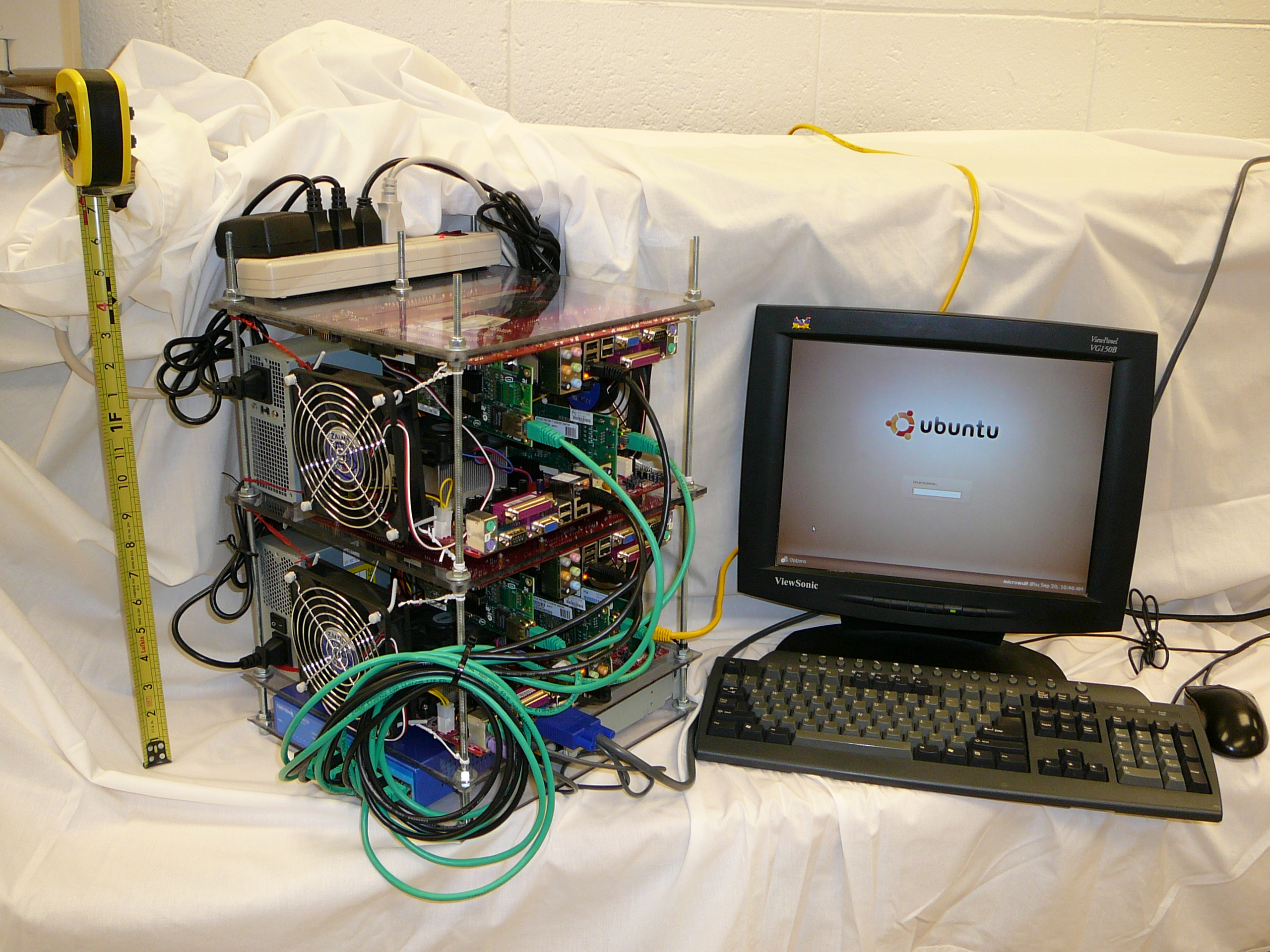

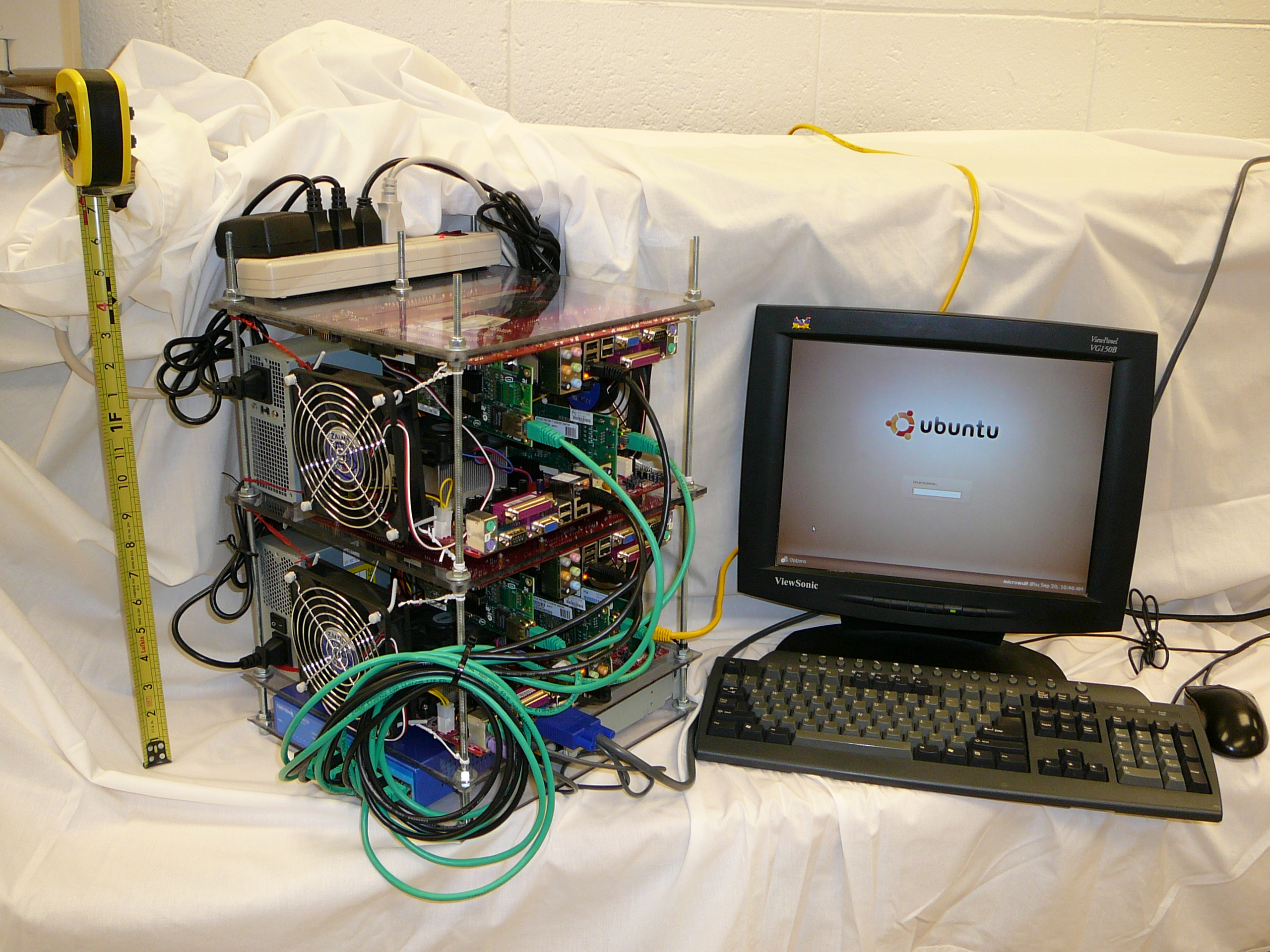

Together, these two developments made it feasible to build a compact, high performance cluster. Adams and Brom began designing such a cluster in fall 2006, and built it in spring 2007. Just as the microcomputer first made it possible for people to have their own desktop computers, Adams and Brom saw their 11" x 12" x 17" design as making it possible for people to have their own desktop Beowulf clusters. They dubbed their design a Microwulf, which is shown to the left.

This first Microwulf had four nodes, eight cores, eight GB of RAM, and a single 250 GB hard disk. The nodes were connected using an 8-port Gigabit Ethernet switch, with one network adaptor for each core.

Microwulf cost less than $2500 to build, yet achieved 26.25 Gflops of measured performance. This made it the first Beowulf cluster to break the $100/Gflop barrier. Within six months, the cost of its components had dropped about $1200, bringing its price/performance ratio to less than $50/Gflop. These kinds of performance numbers drew world-wide attention (including the highest-ever number of visits to Calvin's website in a 24-hour period) and the Microwulf design has been widely emulated since then.
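The price/performance arithmetic behind these claims is simple to reproduce. The sketch below uses the rounded figures from the text as upper bounds ($2500 to build, a drop of about $1200, 26.25 Gflops measured); the exact component costs are not given here, so the results are approximate. The function name is hypothetical.

```python
def dollars_per_gflop(cost_dollars, rmax_gflops):
    """Price/performance ratio: total hardware cost divided by measured Gflops."""
    return cost_dollars / rmax_gflops


if __name__ == "__main__":
    rmax = 26.25           # measured Gflops, from the text
    build_cost = 2500.0    # upper bound: "less than $2500"
    later_cost = build_cost - 1200.0  # assumes the cost fell BY about $1200

    print(f"at build time:    ${dollars_per_gflop(build_cost, rmax):.2f}/Gflop")
    print(f"six months later: ${dollars_per_gflop(later_cost, rmax):.2f}/Gflop")
```

Even with the rounded upper-bound cost, the first ratio falls under $100/Gflop and the second under $50/Gflop, consistent with the claims above.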


In the meantime, Ohm had begun showing its age, to the point that it was barely faster than some students' desktops.

In 2006, Adams had written another NSF proposal requesting a grant to replace Ohm.

This proposal was not funded, but the program officer encouraged Adams to revise and resubmit.

Adams did so in 2007, and this proposal was funded with a grant of about $206,000.

Adams designed Ohm's replacement during fall 2007. The new design called for a single rack of 45 nodes, each with at least two quad-core Xeon CPUs, 2 GB RAM per core, a Gigabit Ethernet network for administrative traffic, and an InfiniBand network for data. The new cluster was dubbed Dahl, in honor of Ole-Johan Dahl, the Norwegian computer scientist who co-invented the Simula programming language and object-oriented programming, two important milestones in computational modeling. Dahl was built in summer 2008, was put into use in fall 2008, and has been operational ever since.

Dahl's Rpeak performance is 3.7 Tflops (trillion double-precision floating-point operations per second); we are still measuring its Rmax performance.

Gary Draving is Dahl's administrator; Kathy Hoogeboom was its first student administrator (summer 2008); Jon Walz was its second student administrator (summer 2009).


Each of these projects was made possible by the generous support of the Calvin College Science Division. We also gratefully acknowledge the help and support of Dr. Victor Matthews of NFP Enterprises, 1456 10 Mile Rd NE, Comstock Park, MI 49321-9666.

This page maintained by Joel Adams.